A More Elegant Approach Emerges

The original article below discusses a number of approaches for getting your AWS credentials onto an EC2 instance. They all work, but they all have significant shortcomings.

AWS recently announced some new capabilities that provide a more elegant and more secure way to address this problem. Using IAM Roles for EC2 Instances, you can create a new IAM entity called a Role. A Role is similar to a User in that you can assign policies to it that control access to AWS services and resources. However, unlike a User, a Role can only be used when it is "assumed" by an entity such as an EC2 instance. This means you can create a Role, associate policies with it, and then associate that Role with an instance when it is launched, and a temporary set of credentials will magically be made available in the instance metadata of the newly launched instance. These temporary credentials have relatively short life spans (although they can be renewed automatically) and, because they are issued through IAM, they can easily be revoked.

In addition, boto and the other AWS SDKs understand this mechanism: they can automatically find the temporary credentials, use them for requests and handle their expiration. Your application doesn't have to worry about it at all. Neat!
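To make the "magic" a little more concrete, here is a minimal Python sketch of roughly what an SDK does behind the scenes: fetch the Role's credential document from the instance metadata service, parse it, and decide when to fetch a fresh copy. It assumes IMDSv1-style access (newer instances may require an IMDSv2 session token first), and the function names and five-minute refresh margin are hypothetical choices; the JSON field names are the ones the metadata service returns.

```python
import json
import urllib.request
from datetime import datetime, timedelta, timezone

# Instance metadata path for IAM Role credentials (reachable only from inside EC2).
METADATA_URL = "http://169.254.169.254/latest/meta-data/iam/security-credentials/"


def fetch_role_credentials(role_name, timeout=2):
    """Fetch the raw JSON credential document for role_name from instance metadata."""
    with urllib.request.urlopen(METADATA_URL + role_name, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def parse_credentials(document):
    """Extract the key pair, session token and expiration time from the JSON document."""
    data = json.loads(document)
    expiration = datetime.strptime(data["Expiration"], "%Y-%m-%dT%H:%M:%SZ")
    return {
        "access_key": data["AccessKeyId"],
        "secret_key": data["SecretAccessKey"],
        "token": data["Token"],
        "expiration": expiration.replace(tzinfo=timezone.utc),
    }


def needs_refresh(creds, now=None, margin=timedelta(minutes=5)):
    """True if the credentials expire within `margin` of `now` and should be re-fetched."""
    if now is None:
        now = datetime.now(timezone.utc)
    return creds["expiration"] - now <= margin
```

A real SDK also sends the session token with every signed request; the point of the sketch is only that nothing secret has to be baked into the AMI or user data.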

The following code shows a simple example of using IAM Roles in boto. In this example, the policy associated with the Role allows full access to a single S3 bucket but you can use the AWS Policy Generator to create a policy for your specific needs.
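A minimal sketch of that setup, assuming boto 2 `IAMConnection` and `EC2Connection` objects are passed in as `iam` and `ec2`: it builds a trust policy that lets EC2 assume the Role, an access policy granting full access to one bucket, creates an instance profile (the container EC2 uses to hand a Role to an instance) and launches an instance with it. The function names, role name, bucket name and AMI ID are placeholders.

```python
import json


def ec2_trust_policy():
    """Trust policy that allows EC2 instances to assume the Role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def single_bucket_policy(bucket):
    """Access policy granting full S3 access to one bucket and the objects in it."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "s3:*",
            "Resource": [
                "arn:aws:s3:::%s" % bucket,
                "arn:aws:s3:::%s/*" % bucket,
            ],
        }],
    }


def launch_with_role(iam, ec2, role_name, bucket, image_id):
    """Create the Role and an instance profile, then launch an instance using it.

    `iam` and `ec2` are assumed to be boto 2 IAMConnection / EC2Connection objects.
    """
    iam.create_role(role_name,
                    assume_role_policy_document=json.dumps(ec2_trust_policy()))
    iam.put_role_policy(role_name, role_name + "-s3",
                        json.dumps(single_bucket_policy(bucket)))
    # Use the role name for the instance profile too, for simplicity.
    iam.create_instance_profile(role_name)
    iam.add_role_to_instance_profile(role_name, role_name)
    return ec2.run_instances(image_id, instance_profile_name=role_name)
```

A typical call would be `launch_with_role(boto.connect_iam(), boto.connect_ec2(), "app-role", "my-bucket", "ami-12345678")`. IAM changes can take a few seconds to propagate, so a production script may need to retry the launch if EC2 does not yet recognize the new instance profile.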

Once the instance is launched, you can immediately start using boto and it will find and use the credentials associated with the Role. I think this is a huge improvement over all of the previous techniques and I recommend it.

Original Content

In Part 1 I discussed the basics of AWS credentials, such as what they are, where to find them and how to protect them. In this installment, I'm going to talk about some of the real world challenges you face when deploying production systems in AWS. Finally, I'll talk about some strategies for dealing with those challenges.

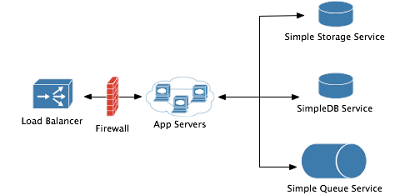

The diagram above shows a configuration that is similar to many production systems we have deployed. It consists of a load-balancing front end and a scalable set of application servers that handle user requests. The load balancing can be done in one of two ways:

- A scalable number of EC2 instances, spread across availability zones, running reverse-proxy software like Apache mod_proxy, HAProxy or Nginx. Each of the instances would have an Elastic IP address attached and you would create a DNS A record for each Elastic IP address. Then, you could use DNS round-robin load balancing to spread requests across your front-end servers.

- Elastic Load Balancer (ELB), one of the new services from AWS, takes the place of the EC2 instances and DNS magic described above and simply provides a DNS CNAME to which all traffic destined for your application should be sent. ELB then manages the scaling of the front end and the load balancing across your application servers.

That raises two interesting questions.

How do the AWS credentials get installed on the application servers?

One way you could make the credentials available to the application servers is to bundle the credentials into the AMI used for the application servers. That would work, but it's a pretty bad idea. First of all, it means that if you ever have to change the credentials, you also have to bundle a new AMI. Yuck. Secondly, it creates too many opportunities for errors. For example, someone might rebundle the AMI for a different purpose and forget to remove the credentials. Or, you might inadvertently share the AMI with another AWS user, or even accidentally make it public, and not realize your mistake for a while. In the meantime, anyone who launched an instance from that AMI could have found the credentials. Yuck, again.

A better approach would be to pass the credentials to the instance in the user data that you can supply when you launch it. In this way, the credentials are passed as a parameter to the AMI rather than being baked in, which makes things a lot more flexible and at least a little bit more secure. There are some other options available, but I'll save those for Part 3 in the series.
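A minimal sketch of the user-data approach, assuming a simple JSON format of our own invention (the key names and function names are hypothetical): the launching machine serializes the credentials and passes the string as `user_data` to boto's `run_instances`, and code on the instance reads it back from the instance metadata service. Keep in mind that any process on the instance that can reach the metadata service can read the user data too.

```python
import json
import urllib.request

# Instance metadata path that returns the user data supplied at launch.
USER_DATA_URL = "http://169.254.169.254/latest/user-data"


def build_user_data(access_key, secret_key):
    """Serialize the credentials into a user-data string (run where you launch from)."""
    return json.dumps({"access_key": access_key, "secret_key": secret_key})


def parse_user_data(text):
    """Recover (access_key, secret_key) from the user-data string (run on the instance)."""
    data = json.loads(text)
    return data["access_key"], data["secret_key"]


def read_user_data(timeout=2):
    """Fetch the raw user data from instance metadata; only works inside EC2."""
    with urllib.request.urlopen(USER_DATA_URL, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

On the launching side this would be used as something like `ec2.run_instances(image_id, user_data=build_user_data(key, secret))` with a boto 2 connection, and on the instance as `parse_user_data(read_user_data())`.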

What risks do I incur by having them there?

This is where we get to the "waking up in a cold sweat in the middle of the night" part of the article. If you put valuable information on a server, you have to acknowledge that there is at least some risk that a baddie will find that valuable information, despite your best efforts to thwart him/her. So, having accepted that possibility, what's the worst that could happen?

Well, if you have one set of AWS credentials for all production services (e.g. EC2, S3, SimpleDB, SQS, ELB, etc.) and if those credentials are discovered by a baddie, then the worst that could happen is very, very bad indeed. With your production credentials in their hot little hands, the baddie can:

- Terminate all EC2 instances

- Access all customer data stored in S3 or SimpleDB

- Delete all data stored in S3 or SimpleDB

- Start up a bunch of new EC2 instances to run up charges on your AWS account

- Use your account to attack other sites

- Lots of other things that I'm not sneaky enough to even imagine

Multiple Personalities

The best way I have found of mitigating the risk of having your AWS credentials discovered and exploited is to use two sets of AWS credentials for managing your production environment. Let's call them your Secret Credentials and your Double Secret Credentials.

Secret Credentials

These are the credentials that would be used on the application servers in our diagram above. When creating these credentials, you should sign up only for the services that are absolutely required. In our example, that would include S3, SimpleDB and SQS but not, for example, EC2. You should also make sure you choose a different, but equally secure, password for the AWS Account Credentials (see Part 1).

Double Secret Credentials

The Double Secret Credentials are just that, doubly secret. These credentials should never, ever be present on a publicly accessible server! These are the credentials that you would use to start and stop all production EC2 instances and to create and manage your Elastic Load Balancers and CloudWatch/AutoScaling groups. In addition, these are the credentials you would use to create the S3 buckets that contain your production data and to create any SQS queues you would need for batch processing. You would then use the access control mechanisms of these services to grant the necessary access to the Secret Credentials.

For SQS, this is quite straightforward if you are using the new 2009-02-01 API. This API includes a powerful new ACL mechanism that gives you a great deal of flexibility in granting access to queues. For example, you could grant access to write to a queue but not read from it, read from it but not write to it, you can even limit access by IP address and/or time of day.
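As an illustration of that flexibility, here is a sketch of a queue policy that lets the application servers' account send messages to a queue, but not receive or delete them, and only from a given address range. The function name, account ID, queue ARN and CIDR block are placeholders; the policy would be attached by setting the queue's `Policy` attribute (for example with boto's `Queue.set_attribute`).

```python
import json


def write_only_queue_policy(queue_arn, writer_account_id, source_cidr):
    """Allow `writer_account_id` to send (but not read) messages, from `source_cidr` only."""
    return {
        "Version": "2008-10-17",
        "Id": queue_arn + "/SendOnly",
        "Statement": [{
            "Sid": "AllowSendFromAppServers",
            "Effect": "Allow",
            "Principal": {"AWS": writer_account_id},
            # Only SendMessage is granted, so ReceiveMessage/DeleteMessage are denied.
            "Action": "SQS:SendMessage",
            "Resource": queue_arn,
            "Condition": {"IpAddress": {"aws:SourceIp": source_cidr}},
        }],
    }


if __name__ == "__main__":
    policy = write_only_queue_policy(
        "arn:aws:sqs:us-east-1:111122223333:uploads", "444455556666", "10.0.0.0/24")
    print(json.dumps(policy, indent=2))
```

Because anything not explicitly allowed is denied, granting the reverse (read but not write) is just a matter of listing `SQS:ReceiveMessage` and `SQS:DeleteMessage` instead.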

For S3, you have fewer options. If the application servers only require read access to S3 resources, then that can easily be accomplished with the ACL mechanism in S3 by granting READ access. If the app servers need to be able to write content to S3 (e.g. for uploading files) you would have to grant the Secret Credentials WRITE access to the S3 bucket. But that also means that all content written to the bucket by Secret Credentials would also be owned by Secret Credentials and could therefore also be read, deleted or overwritten by Secret Credentials.

Actually, that last sentence is not completely correct. In fact, if Secret Credentials has WRITE access (and only WRITE access) to a bucket owned by Double Secret Credentials, Secret Credentials will be able to read, delete or overwrite any content owned by Secret Credentials in that bucket AND will be able to delete or overwrite any content even if owned by Double Secret Credentials. It's sort of weird that keys can be deleted even if they cannot be read or listed but that is how S3 operates.

MSG - 6/25/2009 (Thanks to Allen on S3 Forums for the correction)

If that's unacceptable, the best approach is to have Secret Credentials write uploaded data to an intermediate bucket, send a message to indicate there is new content and then have Double Secret Credentials MOVE that content into the production S3 bucket.
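The move step might look roughly like this sketch, assuming the application servers announce each upload by sending its key name as the body of an SQS message, and that `queue`, `staging` and `production` are boto 2 `Queue` and `Bucket` objects opened with the Double Secret Credentials. It also assumes the Double Secret Credentials are able to read and delete the staged objects. S3 has no native move operation, so the sketch copies and then deletes; the function names are hypothetical.

```python
def promote_upload(staging, production, key_name):
    """Move one object from the staging bucket into the production bucket."""
    # Copy first, made with Double Secret Credentials, so the new copy is theirs.
    production.copy_key(key_name, staging.name, key_name)
    # Then remove the staged object.
    staging.delete_key(key_name)


def drain_upload_queue(queue, staging, production, batch_size=10):
    """Promote every upload announced on `queue`; returns the promoted key names.

    Assumes each message body is the key name of a newly uploaded object.
    """
    promoted = []
    for message in queue.get_messages(num_messages=batch_size):
        key_name = message.get_body()
        promote_upload(staging, production, key_name)
        # Delete the message only after the move succeeded, so a failed move
        # leaves the message on the queue to be retried later.
        queue.delete_message(message)
        promoted.append(key_name)
    return promoted
```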

For SimpleDB, you have even fewer options. There is currently no ACL capability in SimpleDB. So, if Secret Credentials needs any access to SimpleDB, it must own the SimpleDB domain and no other account can have direct access to it. The best approach I've found is to keep a reasonably current backup of the data in Secret Credentials' SimpleDB domain(s), either by copying the data periodically to a domain owned by Double Secret Credentials or by dumping the data to an S3 bucket. Obviously, as the amount of SimpleDB data grows, this approach becomes less and less manageable. I am hoping that the flexible ACL mechanism recently introduced in SQS will eventually make it to SimpleDB, but for now you have to resort to brute-force safety measures.
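A brute-force backup of the kind described above could be as simple as the following sketch, which dumps every item in a domain to a single JSON object in S3. It assumes boto 2 `Domain` and `Bucket` objects (items returned by `select` are dict-like and carry a `.name`); the function names and the JSON layout are hypothetical. The whole dump is built in memory, which is one more reason this approach stops scaling as the data grows.

```python
import json


def dump_items(items):
    """Serialize SimpleDB items (dict-like objects with a .name attribute) to JSON."""
    records = [{"name": item.name, "attributes": dict(item)} for item in items]
    return json.dumps(records, sort_keys=True)


def backup_domain(domain, bucket, key_name):
    """Copy every item in `domain` into a single S3 object named `key_name`.

    `domain` and `bucket` are assumed to be boto 2 Domain and Bucket objects.
    """
    query = "select * from `%s`" % domain.name
    data = dump_items(domain.select(query))
    bucket.new_key(key_name).set_contents_from_string(data)
    return data
```

Running this periodically with a bucket owned by Double Secret Credentials gives you a copy of the data that a compromised application server cannot delete.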

Breathing Easier?

If we follow this basic strategy, most of the scorched earth scenarios described above can be avoided. There are still risks of unauthorized access to data and, depending on the data you are storing, you may need to consider further safeguards such as encryption of the data at rest as well as in flight. That introduces another level of complexity, especially around key management, but in some cases it's the only responsible approach.

The details of your security approach will depend on your application and the type of data you are storing but hopefully this installment provides a basic strategy for minimizing some of the risks described above. A future installment will describe some software tools that can be used to further automate and secure your application within AWS.

Thanks for this great article. I'm eagerly awaiting Part 3.

The best solution I've come up with is to use an AWS proxy service over HTTPS. The proxy will have your real credentials and will provide the same API that the AWS services do (which will allow existing tools to work with only a change of endpoint URL).

The proxy service will need to be notified whenever an instance launches, and it will need to know the instance's private IP address. When a new instance launches, the proxy can install a newly-generated, unique set of fake AWS credentials on the instance. Requests from terminated instances, from non-registered IP addresses, or signed with unrecognized credentials will be denied.

Given the above, the proxy will be able to authenticate the instance and perform AWS actions on its behalf. The real keys are not on the instance, so a compromised instance does not reveal them. If an instance is compromised, then it can still wreak havoc by using its fake credentials to access the proxy, but the fake credentials can be revoked at the proxy (and, if warranted, a policy of expiring each instance's fake credentials after a certain elapsed time can be enforced). Another unregistered (hostile) instance cannot pretend to be your instance because it would have the wrong private IP address/fake credentials combination.
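The core check such a proxy would make can be sketched in a few lines of Python. This is a hypothetical design, not an existing tool: it only covers the key, IP address and expiry rules described in this comment, and a real proxy would also have to verify each request's signature against the fake secret key before forwarding it.

```python
import time


class ProxyAuthorizer:
    """Hypothetical registry the proxy consults before forwarding a request to AWS."""

    def __init__(self, max_age_seconds=3600):
        self.max_age = max_age_seconds
        # fake access key -> (registered private IP, time the key was issued)
        self.registry = {}

    def register(self, fake_key, private_ip, now=None):
        """Record the fake key issued to a newly launched instance."""
        issued_at = time.time() if now is None else now
        self.registry[fake_key] = (private_ip, issued_at)

    def revoke(self, fake_key):
        """Forget a key, e.g. when its instance terminates or is compromised."""
        self.registry.pop(fake_key, None)

    def is_allowed(self, fake_key, source_ip, now=None):
        """Allow only registered, unexpired keys used from the matching private IP."""
        entry = self.registry.get(fake_key)
        if entry is None:
            return False  # unknown key, or its instance was revoked/terminated
        private_ip, issued_at = entry
        if source_ip != private_ip:
            return False  # right key, wrong machine
        now = time.time() if now is None else now
        return now - issued_at < self.max_age
```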

The issue then becomes how to securely add a hook into the launch sequence. Perhaps the same proxy service can be used for that: the proxy service can, when it launches an instance, generate the fake credentials and put them (via a user-data script?) onto the instance. The only hole to plug is to authorize the original client (your local machine) to use the proxy, which can be done manually when setting up the proxy.

I'm sure there are more angles to think about...

Just an update:

AWS announces the Identity and Access Management service (IAM), which allows creation of limited-privilege credentials:

http://aws.amazon.com/iam/

No more proxy needed.